Slonk: Slurm on Kubernetes for ML Research at Character.ai

Today we’re sharing a snapshot of Slonk (Slurm on Kubernetes), the system we use internally to run GPU research clusters at Character.ai.

Although this is not a fully supported open-source project, we’re publishing the architecture and tooling behind it because it solves one of the thorniest problems in machine learning infrastructure: giving researchers the productivity of a classic High-Performance Computing (HPC) environment while leveraging the operational benefits of Kubernetes.

The Problem: Bridging Two Worlds

When we started scaling our training infrastructure, we faced a familiar dilemma. Researchers wanted SLURM - a reliable scheduler with fair queues and gang scheduling. The infra team needed Kubernetes for orchestration, health checks, and autoscaling. Essentially, researchers needed simplicity and speed; operations needed stability and efficient GPU sharing. Slonk gives us both:

- Familiar SLURM UX for users (

sbatch,squeue, partitions, preemption, FIFO + backfill) - Kubernetes as the control plane for resiliency, autosurgery, and integration with the rest of our services

- A uniform way to manage heterogeneous clusters and clouds so we can move capacity between research and serving without drama

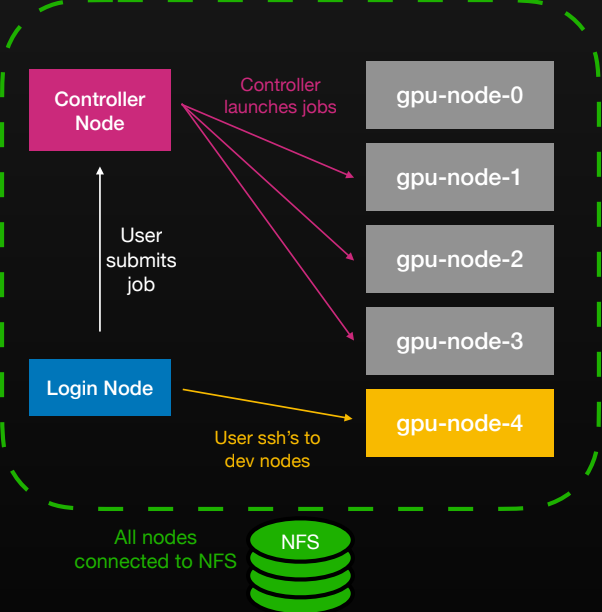

The day-to-day researcher workflow is classic HPC: SSH to a login node, edit code on a shared NFS home, submit a job, and tail logs; Slonk’s controller schedules and allocates resources, and results land back on the same volume.

Architecture: Containers All the Way Down

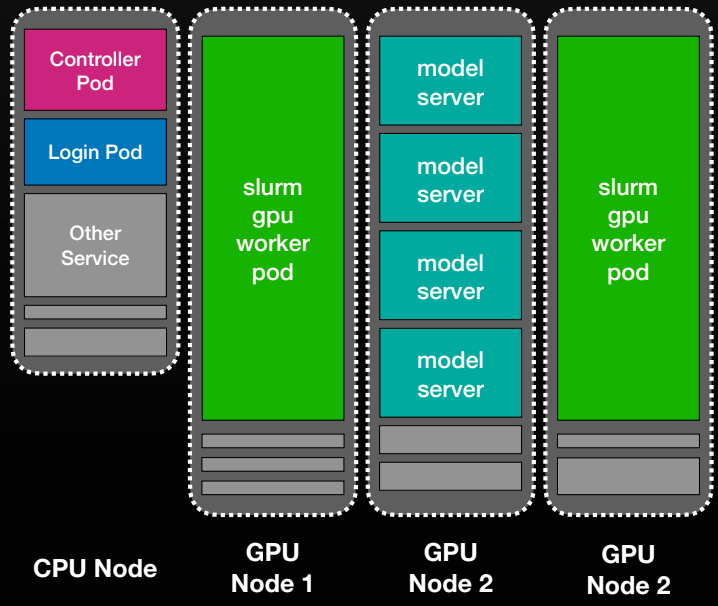

At its core, Slonk treats SLURM nodes as long‑running Kubernetes pods. We run three StatefulSets - controller, workers, and logins - so each SLURM “node” maps directly to a pod (gpu-node-0, gpu-node-1, …).

The controller pods run slurmctld; worker pods run slurmd; login pods provide SSH access and a familiar research environment. Other workloads can co‑exist on the same physical machines.

Some key details that make this seamless:

- Base image as mini‑VM: Each pod includes a lightweight init layer with SLURM daemons, SSH, and system services. Config is injected via ConfigMaps, and

/homeis a persistent NFS volume. We git‑sync shared scripts for prologs, epilogs, and auth helpers to keep nodes consistent. - Authentication & networking: SSO maps to Unix accounts through LDAP, and each node gets its own Kubernetes Service to avoid DNS fan‑out issues at large scale.

- Observability & health: Logs and metrics flow through open‑source monitoring tools. A custom goal‑state operator manages node health, running GPU and network checks and auto‑remediating failures.

- Topology awareness: SLURM’s network‑aware scheduler ensures large jobs get GPUs close together in the same fabric segment.

The result is a system that feels like a traditional supercomputing cluster - researchers still use sbatch and shared /home directories, while leveraging Kubernetes’ resilience, automation, and portability. For TPUs and slice-based hardware, we exploit SLURM’s network topology awareness so allocations are co-located; with capacity pre-staged in the cluster, jobs start in seconds rather than minutes.

Technical Challenges

- Reconciling Two (+One Hidden) Schedulers: the hardest part isn’t containerizing SLURM - it’s synchronizing its resource view with Kubernetes’. We built alignment utilities that continuously reconcile pod states, ensuring SLURM and Kubernetes agree about what’s alive, drained, or preempted.

- Health Checks at Scale: large training runs fail for subtle reasons: a bad GPU, a flaky NIC, a misconfigured NVLink. Slonk includes a suite of health checks - GPU, network, and storage- that run before, during, and after jobs. Bad nodes get drained and recycled automatically.

- Topology Awareness: for large multi-node jobs, network proximity matters. SLURM’s topology-aware scheduler ensures GPUs are co-located in the same fabric segment.

- Machine Lifecycle Management: we built a Kubernetes operator that enforces goal-state for every node, with CRDs storing node events and states. When a node fails a check, it can auto-remediate or alert engineers.

- DNS and Networking Scale: thousands of SSH-able pods strain service discovery. We assign each node its own Kubernetes Service to keep latency predictable and connections stable.

Given these challenges, our technical goal is straightforward: when a researcher or automated system marks a SLURM node as faulty, Slonk should automatically drain the corresponding Kubernetes node and restart its VM at the cloud provider to recover from failure.

If a node repeatedly fails health checks, it’s excluded from the SLURM pool to maintain job stability. Meanwhile, our observability system tracks all faulty nodes for investigation and long-term reliability improvements.

Why This Approach Works

Slonk simplifies cluster management across clouds. Managed SLURM setups often differ by OS, drivers, or monitoring tools, but Slonk provides a consistent environment with the same CUDA stack and observability everywhere. GPU resources can shift dynamically between training and inference by adjusting StatefulSet replicas, and Kubernetes PriorityClass lets production workloads preempt training when needed.

Researchers work the way they always have-submitting jobs with sbatch my_job.sh - while Kubernetes quietly handles node restarts, container health, and logging. SLURM manages job scheduling and quotas, and Kubernetes ensures operational stability. Together, they keep the system simple, reliable, and flexible.

What We’re Releasing

The open-source snapshot includes:

- Helm charts and container specs for the controller, login, and worker StatefulSets.

- Health-check scripts, prologs/epilogs, and cluster utilities distributed via git-sync

- Kubernetes operator for machine lifecycle management and observability (goal-state + CRDs).

- Example configuration for NFS-based shared home directories.

We’re sharing this as a reference implementation. Fork it, build on it, adapt it to your environment.

Repo: https://github.com/character-ai/slonk

Join Us

We’re hiring ML infrastructure engineers who love the intersection of HPC and cloud. If you want to design systems that scale model training and inference, accelerate researcher productivity, and make distributed systems sing, come work with us. The best infrastructure is the kind that researchers never have to think about.